I’m back from ILC 09, slowly digesting everything i lived there. This was my first Lisp conference and my first visit to MIT, a place marked in big red letters in the atlas of my private mythology. And it wasn’t only about places: suddenly realizing that you’re sitting next to Richard Greenblatt, or enjoying Gerry Sussman’s talks in the flesh, was quite an experience, with an almost eerie feeling attached to it.

There’s a problem in meeting your myths: real life is almost never up to the task of matching one’s idealizations. But this was not the case; i enjoyed every minute, with not a tinge of disappointment to be felt. Lisp has been around for quite a while and its history is an important part of computer science’s history. That history comes to life at the ILC, and you get a chance to share it with the people who were there back in the day, the people you read about in books… i’ve wished many times i had been there, and these days in Cambridge i’ve been as close to those halcyon days as i can expect to ever be. Living history, what a feeling.

Had i to single out just one speaker, that’d have to be Gerry Sussman. I just kept finding myself resonating in a deep way with his thoughts. For instance, during the panel on the future of Lisp, conversation revolved around how to keep the language popular and apt for commercial applications. Gerry stepped in to point out that that was all very well, but that we shouldn’t forget that one of the key aspects of programming languages is the extent to which they extend our problem-solving abilities by providing new ways of expressing and talking about problems (as Dijkstra once said: Lisp has assisted a number of our most gifted fellow humans in thinking previously impossible thoughts). Dead on, if you ask me, although unfortunately nobody seemed to have anything else to add, and the debate returned to the far less interesting popularity issues (well, yes, the conference wasn’t perfect after all).

Next day we attended a kind of tongue-in-cheek debate provocatively entitled Are macros a menace?. Richard Gabriel was on the wrong side, arguing that macros are akin to language design and that he’d rather not suffer the consequences of letting your average software engineer undertake such a complex task. Gerry’s intervention at this point made me nod again: if we cannot trust our software engineers to proficiently use the tools of our trade, there must be something wrong in the way we educate them; only those able to judiciously use them should get a diploma, to begin with. That’s exactly how i felt during my period as a CS teacher, as i tried, rather clumsily, to explain in one of my first rants in this blog. It feels great to be in such good company.

Then there was this unplanned mini-talk on why MIT has replaced the SICP-based introduction to programming with something about robots using Python. You can read a nice synopsis of the reasons Sussman gave in Andy’s blog (together with summaries and comments of many other talks). It was nice in a kind of sad way: at the end, while answering a question, Gerry mentioned that this new computing world was not his, and it wasn’t one that he liked [0]. ‘But’, he said, ‘that’s because we’re old farts’. Although i’m younger than him, i like much better the kind of world that gave rise to SICP, and spent the rest of the evening feeling like a sad, old fart. I must say, however, that the ideas SICP grew upon, that simple world where you built up complexity out of small pieces and systems that you understood completely, have much to offer to new generations. We should not dismiss them in the name of modernity.

Besides those cameos, Gerry had two official appearances in the regular conference programme, namely, one invited speech on robust systems design and a lightning talk. (The latter were 5-minute presentations, with Guy Steele and his chronometer strictly ensuring that that was actually the case, followed by a 2-minute Q&A part while the next speaker was setting up her laptop. This formula worked great most of the time, forcing the presenters to squeeze their brains in order to pack as much content, sense and, in most cases, fun as possible into such a short time. We had a living confirmation that working under severe constraints is a great creativity boost.)

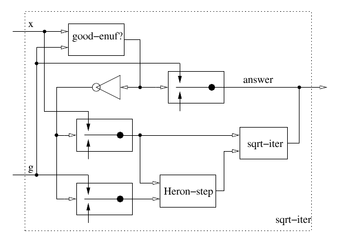

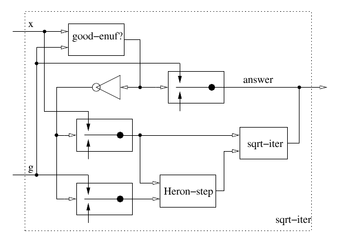

In the invited speech, we had the opportunity of hearing more about Gerry’s ideas on what makes a system robust. I say ‘more’ because he made public a paper on the subject some time ago, and, actually, his ideas on these issues can be traced back to, for instance, the SICP video lectures, where he already lays out the general strategy to tackle the problem: in order to make a system robust, you don’t want to solve a strict, narrowly specified problem, but a whole family of them (or, if you have a very crisp specification, a class of problems in its neighbourhood). That way, when the problem to be solved varies in small ways, your whole solution adapts to the new situation by small variations. The solution is not brittle. To attain such flexible behaviour, we need to build our system upon components that are lenient in the inputs they accept and as sharp as possible in the outputs they produce. Ways to attain the above goals include metalinguistic abstraction (creating a language tailored to the problem domain), use of generic interfaces, degeneracy, and non-deterministic search in the solution space.

Generic functions were nicely demonstrated with examples from the library used in SICM (and the delicious Functional Differential Geometry). We got the warning that using generics this way is dangerous. But they’re powerful, and one needs powerful methods to solve challenging problems; one needs to know what one’s doing, but that’s part of what we’re supposed to be good at, right? Sussman kept smiling and saying ‘beware, this is a very dangerous thing to do’. There was also an almost off-the-record comment to the effect that one of the missed opportunities in Lisp’s design was not defining all its primitive forms as generics (which would definitely have made it the most dangerous language around).
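To make the idea a bit more tangible, here is a minimal sketch of a generic operation in Python rather than in the Scheme of the SICM library. It is not that library's code: the names `add`, `add_handler` and `is_number` are made up for illustration, and dispatch is a naive first-match search over predicates. What it does show is the flavour of the trick: addition that originally only knew about numbers is extended, after the fact, to functions.

```python
from numbers import Number

# Each entry is (predicate for 1st argument, predicate for 2nd argument, handler).
# Hypothetical dispatch table; a real system would be far more careful.
_add_handlers = []


def add_handler(pred_a, pred_b):
    """Decorator that registers a handler for the generic `add`."""
    def register(fn):
        _add_handlers.append((pred_a, pred_b, fn))
        return fn
    return register


def add(a, b):
    """Generic addition: the first handler whose predicates accept (a, b) wins."""
    for pred_a, pred_b, handler in _add_handlers:
        if pred_a(a) and pred_b(b):
            return handler(a, b)
    raise TypeError(
        f"add: no handler for {type(a).__name__} and {type(b).__name__}"
    )


def is_number(x):
    return isinstance(x, Number)


@add_handler(is_number, is_number)
def _add_numbers(a, b):
    return a + b


# Extension added later: the sum of two functions is the function
# that adds their values pointwise.
@add_handler(callable, callable)
def _add_functions(f, g):
    return lambda x: add(f(x), g(x))


# A number mixed with a function is treated as a constant function.
@add_handler(callable, is_number)
def _add_function_number(f, c):
    return lambda x: add(f(x), c)


@add_handler(is_number, callable)
def _add_number_function(c, f):
    return lambda x: add(c, f(x))


square_plus_one = add(lambda x: x * x, 1)
print(square_plus_one(3))  # 10
```

The danger is visible even in this toy: anyone can register a new handler at any time, and every existing caller of `add` silently acquires the new behaviour.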

Degeneracy (using completely different ways of computing the same result) was illustrated, like much of Sussman’s thinking on robust programming, by examples drawn from biological systems (in this talk, it was frogs most of the time). You can find many other examples of this sort of parallelism between computing and biological systems in the paper linked above, a line of thought that i find mixes well with the handful of metaphors i use to reason about my job as a programmer. In particular, it sort of connects with my bias towards living systems such as Lisp’s or Smalltalk’s, where one is evolving the program more than designing and implementing it; which in turn mixes well with the ideas of latent typing and late binding present in those highly dynamic environments (Self, Factor, and APL, as demonstrated in this game of life video (unintended pun intended), are in the same league). Much more than with beautiful but extremely rigid ones based on static typing, such as Haskell’s. (That’s why i keep coming back to Lisp after my Haskell excursions, or why i find R6RS so disappointing. Or, if you’ll pardon my keeping on mixing metaphors, why i prefer healing to performing autopsies.)

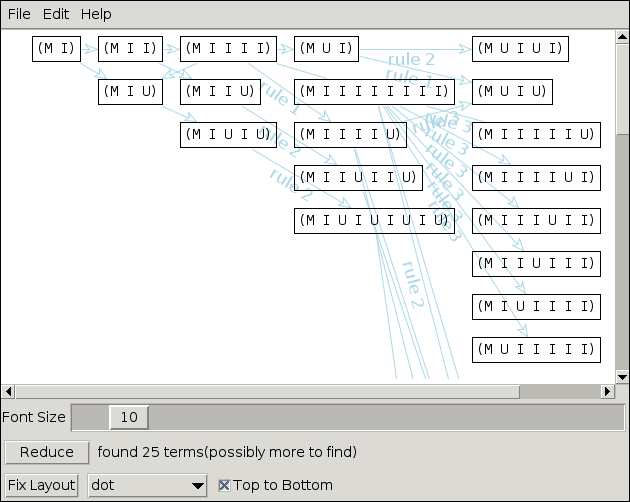

Another avenue to flexibility mentioned in the talk is constraint propagation networks, in which multiple sources contribute to defining the value of each state variable. That way you get the possibility of partially defined values, which can nonetheless be useful by themselves, depending on the computation you’re performing. Propagator networks also work as additive computation machines, able to refine coarse inputs into correct solutions for problems specified as a set of constraints. One of Sussman’s students, Alexey Radul, is actively working on propagators, building on work initiated back in the day by Guy Steele. You can find an extensive report and nice, working Scheme code here.
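As a rough illustration of how partial information from several sources can be merged, here is a toy propagator network in Python. It is only a sketch under strong simplifying assumptions, not the Scheme system mentioned above: cells hold closed intervals `(lo, hi)`, merging is interval intersection, propagators run by direct recursive calls instead of through a scheduler, and all the names (`Cell`, `propagator`, `sum_constraint`) are invented for the example.

```python
class Contradiction(Exception):
    """Raised when two sources give incompatible information about a cell."""


class Cell:
    def __init__(self, name):
        self.name = name
        self.content = None      # None means "nothing known yet"
        self.neighbours = []     # propagators to re-run when we learn more

    def add_content(self, interval):
        """Merge new partial information (an interval) into the cell."""
        if self.content is None:
            merged = interval
        else:
            lo = max(self.content[0], interval[0])
            hi = min(self.content[1], interval[1])
            if lo > hi:
                raise Contradiction(f"{self.name}: {self.content} vs {interval}")
            merged = (lo, hi)
        if merged != self.content:   # only wake neighbours on real news
            self.content = merged
            for run in self.neighbours:
                run()


def propagator(inputs, output, fn):
    """Attach fn so that output is refined whenever all inputs are known."""
    def run():
        values = [cell.content for cell in inputs]
        if all(v is not None for v in values):
            output.add_content(fn(*values))
    for cell in inputs:
        cell.neighbours.append(run)
    run()


def interval_add(x, y):
    return (x[0] + y[0], x[1] + y[1])


def interval_sub(x, y):
    return (x[0] - y[1], x[1] - y[0])


def sum_constraint(a, b, total):
    """a + b = total, usable in every direction."""
    propagator([a, b], total, interval_add)
    propagator([total, b], a, interval_sub)
    propagator([total, a], b, interval_sub)


a, b, total = Cell("a"), Cell("b"), Cell("total")
sum_constraint(a, b, total)
a.add_content((1, 3))        # a is somewhere between 1 and 3
total.add_content((10, 10))  # the total is exactly 10
print(b.content)             # (7, 9)
b.add_content((8, 12))       # a second, independent source about b
print(a.content, b.content)  # (1, 2) (8, 9)
```

Neither source pins down `b` on its own, yet the network ends up knowing more about both `a` and `b` than any single input said: coarse inputs are refined additively, and incompatible ones surface as a `Contradiction`.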

Finally, Gerry gave a lightning talk with yet another piece of food for thought. The gist of it was drawing our attention to the possibility of exploiting a posited parallelism between the theory and methods used to solve differential equations on the one hand, and programs on the other. There’s a way of approaching the solution of a differential equation that is, if you will, algebraic in nature: one manipulates expressions algebraically to simplify them and eventually obtain a closed-form solution, or, if that’s not possible, creates numerical approximations that evolve the initial conditions through state space in discrete time steps. You end up that way with something that works as a solution, but, most of the time, without a deep understanding of the traits that make it a solution: in the spirit of the robust design ideas sketched before, we should probably be asking for more qualitative information about how solutions behave as we change the boundary or initial conditions of our problem.

As it happens, mathematicians have a way of analyzing the behaviour of solutions to differential equations by studying their Poincaré maps and sections, which are views into the orbits followed by the solutions in their state space. Many times, you don’t have to solve the differential equation exactly to predict its qualitative behaviour in state space (e.g., is it bounded? is it periodic?) and to get insight into how it changes in the presence of perturbations.

The analogy with computing processes is clear: most of the time, we narrow our efforts to finding, so to speak, algebraic solutions to our computing problems: the program-as-text is the analog of the process of finding an exact formula for the solution of a differential equation. What we’re missing, according to Sussman, is a way of reasoning about the qualitative features of our programs à la Poincaré, i.e., a way of reasoning about programs in the state space of their outputs, beyond the mechanistic algorithmic details. Gerry admitted that he didn’t know how or in what form this kind of reasoning would proceed, only that his hunch is that it must be possible, and exhorted us to find the way.
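For readers who have never met a Poincaré section, the following Python sketch shows the differential-equation half of the analogy only (nobody, Sussman included, claimed to know what the program half looks like). It assumes a one-dimensional oscillator x'' = accel(x, v), integrated with a simple semi-implicit Euler step, and takes as section the upward crossings of x = 0; the function names and the tolerance are arbitrary choices made for the example.

```python
def upward_crossings(accel, x0, v0, dt=1e-3, steps=100_000):
    """Integrate x'' = accel(x, v) and record the velocity each time the
    orbit crosses the section x = 0 going upwards (from x < 0 to x >= 0)."""
    x, v = x0, v0
    crossings = []
    for _ in range(steps):
        # Semi-implicit Euler: update the velocity first, then the position.
        v_next = v + accel(x, v) * dt
        x_next = x + v_next * dt
        if x < 0.0 <= x_next:
            crossings.append(v_next)
        x, v = x_next, v_next
    return crossings


def describe(crossings, tol=1e-2):
    """Read qualitative behaviour off the sequence of section crossings."""
    if len(crossings) < 2:
        return "unclear"
    if all(abs(c - crossings[0]) <= tol for c in crossings):
        # The orbit keeps returning to the same point of the section.
        return "periodic"
    if all(later < earlier for earlier, later in zip(crossings, crossings[1:])):
        # Each return is slower than the previous one: the orbit spirals in.
        return "decaying"
    return "unclear"


undamped = upward_crossings(lambda x, v: -x, x0=1.0, v0=0.0)
damped = upward_crossings(lambda x, v: -x - 0.1 * v, x0=1.0, v0=0.0)
print(describe(undamped))  # periodic
print(describe(damped))    # decaying
```

The point is that `describe` never looks at a formula for the solution: it only inspects where the orbit pierces the section, and that is enough to tell a periodic orbit from one that spirals towards rest.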

ILC 09 would have been worth it for those talks alone, but there was much more: don’t miss the next one!

–

[0] As rightly pointed out by Dan Weinreb in his comment below, Sussman endorses the changes in the new curriculum, though. His post on this issue is worth reading too.
