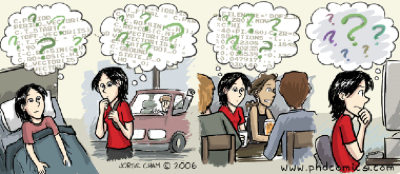

Last week at work was a tough one. I basically looked like this:

Except that i’m not a girl and wear longer hair, the picture is quite accurate: nothing seemed to work and, of course, we had a deadline. To give you a bit more background, this is an embedded application controlling data gathering from scientific instruments that will fly on a satellite called LISA Pathfinder (see this older post if you’re curious). Fortunately, my struggles seem to be over now, but i took some notes just after the fact, trying to capture the key bad practices that had led me to a near disaster. I thought it might be interesting to share some musings on my mistakes, if only to make sure i avoid them in the future. Here they are.

We have special communications hardware in place, and i’ve been in charge of developing its drivers and protocol stack, implemented in the layered way we’ve been taught in our networking courses: hardware, data-link, transport and application layers.

Everything seemed to run smoothly with the first protocol version, but of course customers are never happy with first versions and we soon had a protocol redefinition, affecting the data-link and transport layers. And oh, the deadline: i just re-implemented the stack without doing any testing. Yeah, i’m blushing, but i had no choice. Or had i? Well, i was following orders, as they say, and my boss had no choice either. I could have refused the orders, and made everybody up the ladder reconsider their plans. Problem is, those plans spring from promises to customers, and your boss is not going to reconsider anything unless you stubbornly (and probably wisely) insist on not doing a bad job. In which case you may get fired. But, if you can afford that risk, i think that’s the most honest and best thing to do. I didn’t do that. I have friends in this project, and leaving would jeopardize its completion. No way. Second best is to say yes, sir and test your software anyway. You’ll miss the deadline, but then, you’ll miss it anyway if you don’t write tests (unless you’re younger and wiser than i am). I didn’t do that, either. Shame on me. What i did instead was jump into the impossible task, knowing all too well that it wouldn’t work (which makes me feel even more stupid). What i did get right, for a change, was gloomily forewarning everyone of the upcoming problems. If you want my advice, that’s the absolute minimum you should do in a situation like this one. When things go awry, it may save your, er, position.

So there i was, with my untested protocol stack exploding at the slightest contact with an externally sent message coming through our communications card. Remember that this is embedded software with no operating system below, so i had no ‘Access violation’ dialogs ready to take me into a debugger. Fortunately, we’re using design by contract tactics: all preconditions and some consistency predicates are duly asserted, and, whenever they fail, a black-and-white equivalent of the BSoD springs out of the board’s serial port with a detailed report of the CPU’s state at the time of the catastrophe. That caught a few obvious mistakes (as it had done in previous versions) and deserved the first entry in my lessons learned notebook: design by contract works for us.
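To make the idea concrete, here is a minimal sketch of what such a precondition check might look like in C. It is not the project's code: `REQUIRE`, `contract_fail`, `frame_payload_length` and `HEADER_LEN` are all hypothetical names, and the failure hook here just logs to stderr and returns, where the board's version would dump the CPU state to the serial port and halt.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* All names below are hypothetical; this is not the flight code. */
#define HEADER_LEN 4u

/* Counts violations so a host-side test can observe them. */
static unsigned contract_failures = 0;

/* On the board this hook would dump the CPU state to the serial port and
   halt. Here it only logs to stderr and returns, so the demo can go on. */
static void contract_fail(const char *kind, const char *expr,
                          const char *file, int line)
{
    contract_failures++;
    fprintf(stderr, "%s violated: %s (%s:%d)\n", kind, expr, file, line);
}

/* Check a precondition; on failure report it and return `retval`. */
#define REQUIRE(cond, retval)                                          \
    do {                                                               \
        if (!(cond)) {                                                 \
            contract_fail("precondition", #cond, __FILE__, __LINE__);  \
            return (retval);                                           \
        }                                                              \
    } while (0)

/* Payload length of a received frame, or -1 if the contract is broken. */
int frame_payload_length(const uint8_t *frame, size_t frame_len)
{
    REQUIRE(frame != NULL, -1);
    REQUIRE(frame_len >= HEADER_LEN, -1);
    return (int)(frame_len - HEADER_LEN);
}

/* Host-side usage: one valid call, then two contract violations. */
void contract_demo(void)
{
    uint8_t frame[6] = {0};
    assert(frame_payload_length(frame, sizeof frame) == 2);
    assert(frame_payload_length(NULL, sizeof frame) == -1);
    assert(frame_payload_length(frame, 3) == -1);
}
```

The point of the macro is that the violated expression, file and line travel with the report, so a failure names its own cause instead of surfacing later as corrupted state.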

After some fixes, the program was able to enter smoothly into its idle event loop, which was actually bad news: it was running too smoothly, to the point of simply ignoring any incoming network message. No assertion failure, no nothing. There began the odyssey: the only solution was to read my code and, slowly but surely, write all those missing unit tests. Second lesson: writing unit tests is far easier while developing the software under test than after the fact. And, more importantly, unit tests are your safety net for refactoring. In my experience, refactoring is an integral part of software development. Although i think that some design at the architectural level is needed before jumping to Emacs, it’s only after i’ve written a couple of half-implementations of the desired functionality that the appropriate design comes to light. In my opinion, trying to write a detailed design out of the blue is a waste of time: bottom-up works nicely as a way to discover the design and fix the initial (and necessary) top-down architecture. I like to call this process bottom-top development.

So, i was soon re-implementing one of my modules, which, now that i looked at it a second time, was unduly complex. This is also old hat: only after a first implementation (or, sometimes, a few of them) do i understand the problem well enough to write a simple one. Not that i’m discovering anything new here:

A designer knows he has achieved perfection not when there is nothing left to add, but when there is nothing left to take away. – Antoine de Saint-Exupery.

and, of course,

Plan to throw one away. You will do that, anyway. Your only choice is whether to try to sell the throwaway to customers. – Frederick Brooks.

Only that this time it was taking me more time than necessary: when you refactor, you want tests to tell you that every step in the process isn’t breaking anything. Obviously, i was breaking something, for my program wouldn’t work, refactoring or not. More tests, and more reading my code again and again. I knew i was overwriting memory somewhere in there (as it turned out, i was doing that in more than one place), and the only problem was to find where. In the process, i jotted down a couple more observations on the sources of my troubles.

Everybody knows that appropriate naming of objects in a program is important. As i grow older, appropriate naming is growing more important to me. For instance, in this case i was debugging a piece of code dealing with (among other stuff) circular buffers. One of my buffer handling ADTs had a member recording the size of a buffer: i had called that variable size. That seems OK, except for the fact that we deal with two kinds of buffers, one containing bytes and one containing 16-bit words. I had a couple of nasty bugs that i was not detecting because, when reading the code, i was thinking of size as the number of bytes in the buffer. As it turned out, word_number would have been a far better name! When you’re writing the code, it’s easy to remember the exact meaning of every variable, and sloppy naming doesn’t seem to be an issue. But when you come back to debug your code, it’s easy to misremember the meaning of a sloppy name. Third lesson re-learnt: appropriate naming is harder than it seems, and more worthwhile.
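As an illustration of the naming point, here is a small sketch of a circular buffer of 16-bit words whose counters carry their unit in their names. Only word_number comes from the text above; the type, the functions, the capacity and the use of plain assert for preconditions are assumptions made for the example, not the actual ADT.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define WORD_BUFFER_CAPACITY 8u  /* hypothetical capacity, in 16-bit words */

/* Circular buffer of 16-bit words. Every counter names its unit, so the
   question "bytes or words?" never has to be answered from memory. */
typedef struct {
    uint16_t words[WORD_BUFFER_CAPACITY];
    size_t   read_index;   /* index of the oldest stored word */
    size_t   word_number;  /* words currently stored, not bytes */
} word_buffer;

void word_buffer_init(word_buffer *buf)
{
    assert(buf != NULL);
    buf->read_index = 0;
    buf->word_number = 0;
}

/* Bytes occupied by the stored words: the conversion lives in one place. */
size_t word_buffer_byte_number(const word_buffer *buf)
{
    assert(buf != NULL);
    return buf->word_number * sizeof(uint16_t);
}

/* Append one word; returns 0 on success, -1 if the buffer is full. */
int word_buffer_push(word_buffer *buf, uint16_t word)
{
    assert(buf != NULL);
    if (buf->word_number == WORD_BUFFER_CAPACITY)
        return -1;
    size_t write_index =
        (buf->read_index + buf->word_number) % WORD_BUFFER_CAPACITY;
    buf->words[write_index] = word;
    buf->word_number++;
    return 0;
}

/* Remove the oldest word into *word; returns 0 on success, -1 if empty. */
int word_buffer_pop(word_buffer *buf, uint16_t *word)
{
    assert(buf != NULL && word != NULL);
    if (buf->word_number == 0)
        return -1;
    *word = buf->words[buf->read_index];
    buf->read_index = (buf->read_index + 1) % WORD_BUFFER_CAPACITY;
    buf->word_number--;
    return 0;
}
```

With the byte count available only through word_buffer_byte_number, any code that needs bytes has to say so explicitly, which is exactly the confusion a bare size member invites.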

Only by the end of my struggles did i use a debugger (on the development machine; we don’t have a way to connect a remote debugger to the deployment board). But it helped to nail down the final details. That made me think. When i started programming professionally, i was using Visual Studio 4 (or something) and did use the debugger a lot. As time passed and i learned and honed my skills, the debugger was less and less necessary. I found that understanding my programs and reasoning about their operation was more beneficial than relying on the easy way out of stepping through my code blindly. I remember how my co-workers used to make fun of me, saying that i was running the program in my head when i was trying to find why a program of mine was failing. As a result, i had an excellent understanding of the code i wrote, and at first abandoning debuggers made me a better programmer. So, i’m a firm believer in the theory that says that excessive use of debuggers spoils programmers. But that does not mean that debuggers are always harmful. On the contrary, they’re one of the tools of the trade, and may be quite useful at times. My mistake had been to forget that they exist at all! As in this case, where i was having out-of-bounds accesses to memory due to a misplaced offset. I knew that somewhere i was overwriting memory, but it took quite a bit to find the culprit. A watchpoint in the debugger would have caught it in a breeze. So one more note-to-self: stop being an i-don’t-need-no-stinkin’-debugger macho programmer, and use the right tools in each situation.

Happily, my implementation seems to be working again, and the world, unsurprisingly, didn’t stop spinning due to yet another unmet deadline. After all the hassle, i know for sure that i don’t want to spend my time dealing with low-level details that a higher-level language and runtime can handle better than me (i’ve been feeling quite a bit like this guy), even in an embedded setting: next time, i will evaluate more carefully alternatives like Hedgehog or Tinyscheme. A more depressing thought is how basic my mistakes were: every single observation in this post should be self-evident to any seasoned programmer, and after almost ten years in the trenches i should have known better. Either i’m particularly stupid or we should be aware of how much easier it is to preach than to actually live up to our own advice. All in all, i feel i’m getting older, and i’m not sure about the wiser bit.

Update: Talking about debuggers, here’s an interesting article on how to get the most out of macros in gdb. The article is a follow-up to a previous one on how gdb and strace work together.

October 9, 2006 at 4:37 pm

Glad to read you again Jao!

I tend to have the same attitude you have towards debuggers, except when I’m coding in a language with no garbage collection support and no buffer-overflow detection. I found the combination gdb+valgrind to be quite efficient in this situation.

In my experience, writing unit tests always pays off, no matter what the term. The time spent wondering what is wrong far outweighs the time spent writing the test… but you obviously know that!

October 9, 2006 at 9:45 pm

Debugger usage has a bit of a catch-22 to it though: the less you use the debugger, the better you get, but when you do need the debugger, you can’t use it because you’re out of practice.

Well, that’s how it is for me anyway.